I was recently at the Educators Rising conference at Westminster College in Fulton. This conference is for high school students who are thinking about careers as teachers. I was presenting sessions about AI for students. At lunch, a couple of the students from one of my sessions approached me and said they wanted to understand more about the term “bias” in AI. So, first, I asked them what they thought it was.

They correctly identified one of the main causes of bias as being the data used in a model. They understood that if the data is not completely representative of a topic or subject (for example, only pictures of pepperoni pizzas are added to the model), whether by choice or by accident, it can lead to bias (every time you ask it for a picture of pizza, it only shows pepperoni).
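For readers who like to see the idea in code, here is a minimal sketch of the pizza example. It is a toy, not how an image model actually works: the "model" just memorizes how often each topping label appears in its training data and samples from those frequencies. The function names (`train`, `generate`) and the topping lists are made up for illustration.

```python
import random
from collections import Counter


def train(labels):
    """Toy 'model': the fraction of training examples for each topping."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {topping: n / total for topping, n in counts.items()}


def generate(model, n, seed=0):
    """Sample n 'pizzas' in proportion to what the model saw in training."""
    rng = random.Random(seed)
    toppings = list(model)
    weights = [model[t] for t in toppings]
    return rng.choices(toppings, weights=weights, k=n)


# Hypothetical training sets: one skewed, one balanced.
skewed_data = ["pepperoni"] * 100
balanced_data = ["pepperoni", "mushroom", "veggie", "cheese"] * 25

skewed_model = train(skewed_data)
balanced_model = train(balanced_data)

# The skewed model has never seen anything else, so it can only say pepperoni.
skewed_output = set(generate(skewed_model, 20))
balanced_output = set(generate(balanced_model, 200))

print("Skewed model produces:", skewed_output)
print("Balanced model produces:", balanced_output)
```

The skewed model is not "wrong" about its data. It simply has no way to produce a topping it was never shown, which is the heart of data bias.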

But I asked them to do a little research with me and see if there were more ways bias could be introduced in AI systems. So we did a “lateral search”, with a couple of them doing web searches and one asking ChatGPT, about where and how bias can be introduced in AI models.

First, they were surprised that the AI tool itself would admit it had problems 😊

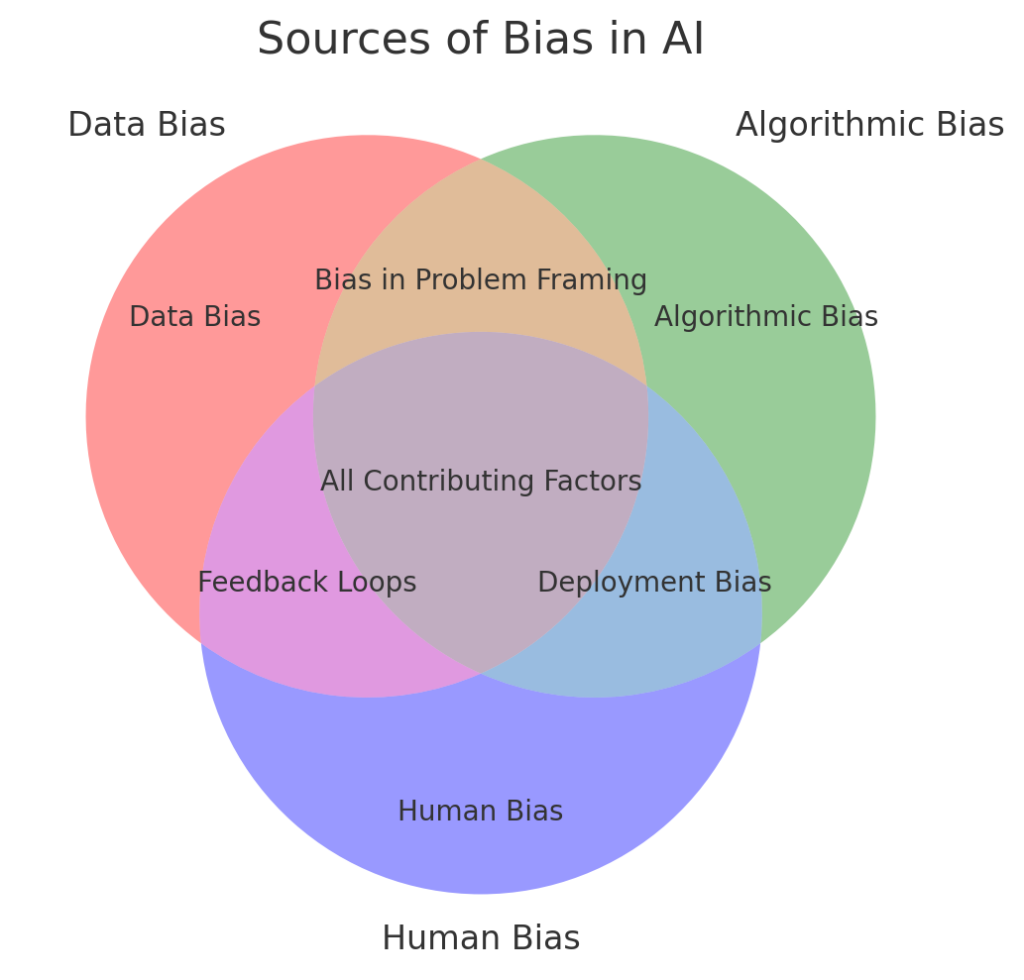

But, by asking a few questions and then prompting ChatGPT for a diagram, we got this:

This is not bad! We checked it against some online sources and, although it doesn’t have a ton of details, the students felt they understood it better than some other sources. Here is their combined (search and prompt) explanation of each of the above:

· Data Bias – The most well-known source. If the training data is unbalanced, incomplete, or reflects societal biases, the AI model will learn and replicate those biases.

· Algorithmic Bias – Even with balanced data, the way an AI model processes and interprets the data can introduce bias. Certain algorithms may favor specific patterns, leading to skewed outputs.

· Human Bias – AI is designed, trained, and fine-tuned by humans who may unintentionally introduce bias through choices like feature selection, labeling, or defining success metrics.

· Bias in Problem Framing – The way a problem is defined or structured can introduce bias. If the objective function or constraints favor one group over another, bias will persist even with fair data.

· Deployment Bias – Once deployed, AI systems can be used in unintended ways, leading to biased outcomes. If an AI is tested under controlled conditions but behaves differently in the real world, issues can emerge.

· Feedback Loops – AI systems that learn from user interactions can reinforce biases. For example, if a recommendation system suggests content based on previous interactions, it can amplify existing preferences and exclude diverse viewpoints.
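The feedback loop item is the easiest of these to show in a few lines of code. The sketch below is a deliberately oversimplified simulation, not a real recommendation system: it assumes the recommender always shows the topic with the most past clicks, and that the user clicks whatever is shown. The topic names and starting click counts are invented for illustration.

```python
def recommend(clicks):
    """Show the topic with the most past clicks (a deliberately naive rule)."""
    return max(clicks, key=clicks.get)


def simulate(clicks, rounds):
    """Run the loop: recommend a topic, record a click, repeat."""
    clicks = dict(clicks)  # copy so the starting counts are left untouched
    for _ in range(rounds):
        shown = recommend(clicks)
        clicks[shown] += 1  # assumption: the user clicks whatever is shown
    return clicks


def share(clicks, topic):
    """Fraction of all clicks that belong to one topic."""
    return clicks[topic] / sum(clicks.values())


# Hypothetical starting point: a very slight preference for cooking.
start = {"cooking": 6, "sports": 5, "science": 5}
end = simulate(start, rounds=50)

print(f"Cooking share before: {share(start, 'cooking'):.0%}")
print(f"Cooking share after:  {share(end, 'cooking'):.0%}")
print("Final click counts:", end)
```

A one-click head start is enough for cooking to take every later recommendation, so sports and science never get another chance. Real systems are far more nuanced, but the amplifying pattern is the same one the students described.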

They also pointed out that even though human bias is listed as a category, ChatGPT seems to “hint” or imply that it is the human in the loop that is the cause of most of the problems. Which is painfully accurate 😊.

Great group of kids and a fun interaction.